Clinical trial WTF

Some thoughts on a recent clinical trial published in the Journal of Clinical Oncology, entitled “Crizotinib in Combination With Chemotherapy for Pediatric Patients With ALK+ Anaplastic Large-Cell Lymphoma: The Results of Children’s Oncology Group Trial ANHL12P1.” The paper is here.

So this appears to be a trial evaluating the addition of crizotinib to chemotherapy for children with this type of lymphoma. Except… it isn’t. If you read on to the Study Design section, you find that “ANHL12P1 was a randomized phase II study with the primary aim to determine the toxicity and efficacy of the addition of brentuximab vedotin (BV; arm BV) or CZ [crizotinib] (arm CZ) to standard chemotherapy.” So that sounds like a three-armed trial: standard chemotherapy versus standard + BV versus standard + CZ. But it’s not that either.

What it is, is a mess.

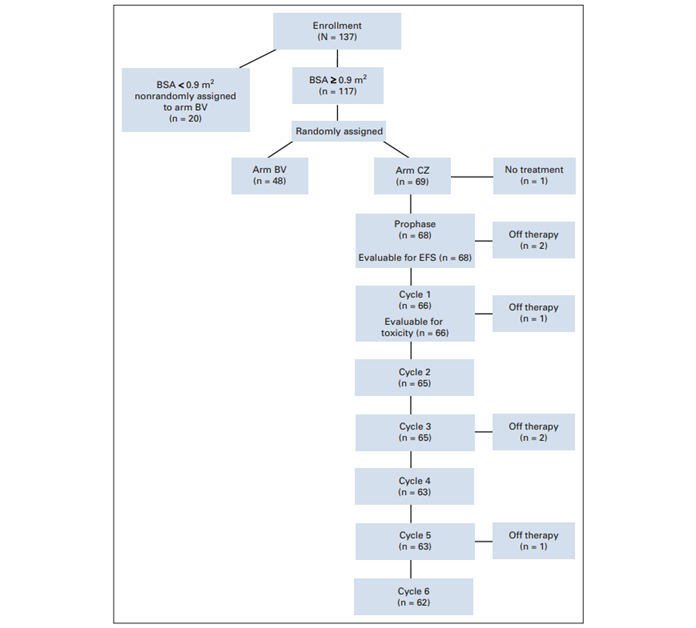

As can be seen from the CONSORT-style flow chart, they randomised patients between the addition of BV and the addition of CZ (which may or may not be a sensible comparison; I’m wondering why they didn’t also randomise to standard care). This paper, though, reports outcomes only for the CZ arm. That seems a very odd thing to do. They went to the effort of setting up a randomised trial, but then didn’t compare the randomised arms – which is the thing a randomised trial is designed to do. What’s going on?

This seems to be an example of “single-arm thinking.” I keep encountering a pervasive belief that you can get a good estimate of “the” effect of a therapy from a single-arm trial alone. That is nonsense: the response rate, event-free survival and so on that you observe will vary with the characteristics of the patients you recruit. There is always patient selection. If you recruit a different set of patients, you’ll get a different incidence of outcomes. The belief that there is a single “response rate” associated with a therapy, independent of who it is applied to, is simply wrong. The whole reason we do randomised trials is to avoid these biases. We set up a study that doesn’t purport to estimate “THE” effect of a treatment, but instead compares two or more treatments to assess their benefits and harms relative to each other. A randomised trial is explicitly set up to allow an unbiased comparison between the randomised arms, so to randomise but then not make that comparison is just really weird.
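To make the patient-selection point concrete, here is a toy simulation. It is a sketch under made-up assumptions, not anything taken from ANHL12P1: the new treatment adds the same hypothetical 10-percentage-point improvement in the chance of staying event-free for every patient, and the only thing that changes between scenarios is the case mix (the share of high-risk patients recruited). The function `simulate_trial` and all the probabilities are invented for illustration, and outcomes are treated as a simple yes/no rather than as time-to-event data.

```python
import random

# Hypothetical, illustrative numbers only (not from ANHL12P1 or any real trial).
P_EVENT_FREE_LOW_RISK = 0.80   # chance of staying event-free on standard care
P_EVENT_FREE_HIGH_RISK = 0.50
TREATMENT_BENEFIT = 0.10       # assumed identical absolute benefit for every patient


def simulate_trial(rng, n_patients, frac_high_risk, randomise):
    """Simulate one trial recruited from a population with a given case mix.

    If randomise is False, every patient gets the new treatment (single arm).
    If True, each patient is allocated to treatment or control by a coin flip.
    Returns the event-free proportion in each arm (None if an arm is empty).
    """
    arms = {"treated": [], "control": []}
    for _ in range(n_patients):
        high_risk = rng.random() < frac_high_risk
        p = P_EVENT_FREE_HIGH_RISK if high_risk else P_EVENT_FREE_LOW_RISK
        treated = (rng.random() < 0.5) if randomise else True
        if treated:
            p += TREATMENT_BENEFIT
        arms["treated" if treated else "control"].append(rng.random() < p)
    return {
        arm: (sum(outcomes) / len(outcomes) if outcomes else None)
        for arm, outcomes in arms.items()
    }


if __name__ == "__main__":
    rng = random.Random(12)
    for frac in (0.2, 0.6):
        single = simulate_trial(rng, 40_000, frac, randomise=False)
        rct = simulate_trial(rng, 40_000, frac, randomise=True)
        print(
            f"{frac:.0%} high-risk: single-arm event-free rate = {single['treated']:.2f}; "
            f"randomised difference = {rct['treated'] - rct['control']:+.2f}"
        )
```

With these assumed numbers the single-arm rate should come out around 0.84 when 20% of recruits are high-risk and around 0.72 when 60% are, even though the treatment is identical in both. The randomised difference between arms should sit close to +0.10 in both cases, because randomisation balances the case mix across the arms being compared.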

They did do some analysis of their primary outcome (event-free survival, i.e. time from randomisation to progression, relapse, or death). But the comparison was between the CZ arm and a “historical control” 70% cure rate (the paper doesn’t say at what time point – but 2 years is mentioned later).

There’s some other weirdness too. The randomised CZ arm had 69 patients, but the BV arm only 48. There were also 20 patients who were ineligible for randomisation but received BV. I’m really, really hoping that they didn’t just add these to the 48 randomised patients to make a BV arm roughly the same size as the CZ arm. There’s a separate paper on the BV arm, but I haven’t dared look at it yet.

This paper was written by people experienced in trials, reviewed by editors and peer reviewers (presumably including statisticians), and published in what is regarded as one of the top journals in the field. Staggering.